Introduction

The NVIDIA Tesla A100 80GB is a powerhouse in AI acceleration, deep learning, and high-performance computing (HPC). With industries like autonomous driving evolving rapidly, the A100 plays a critical role in training complex neural networks and managing massive datasets. However, the real question is: how does it compare to its closest competitors, such as the AMD Instinct MI250, Google TPU v4, and even NVIDIA’s own RTX 4090? In this article, we’ll break down the strengths, performance metrics, and ideal use cases of these GPUs in AI, deep learning, and enterprise environments.

At Server Tech Central, we provide enterprise-grade AI hardware solutions, empowering businesses with high-performance GPUs to scale their data centers and AI workloads efficiently. Let’s explore whether the NVIDIA Tesla A100 80GB stands as the leading choice or if other contenders offer a better alternative.

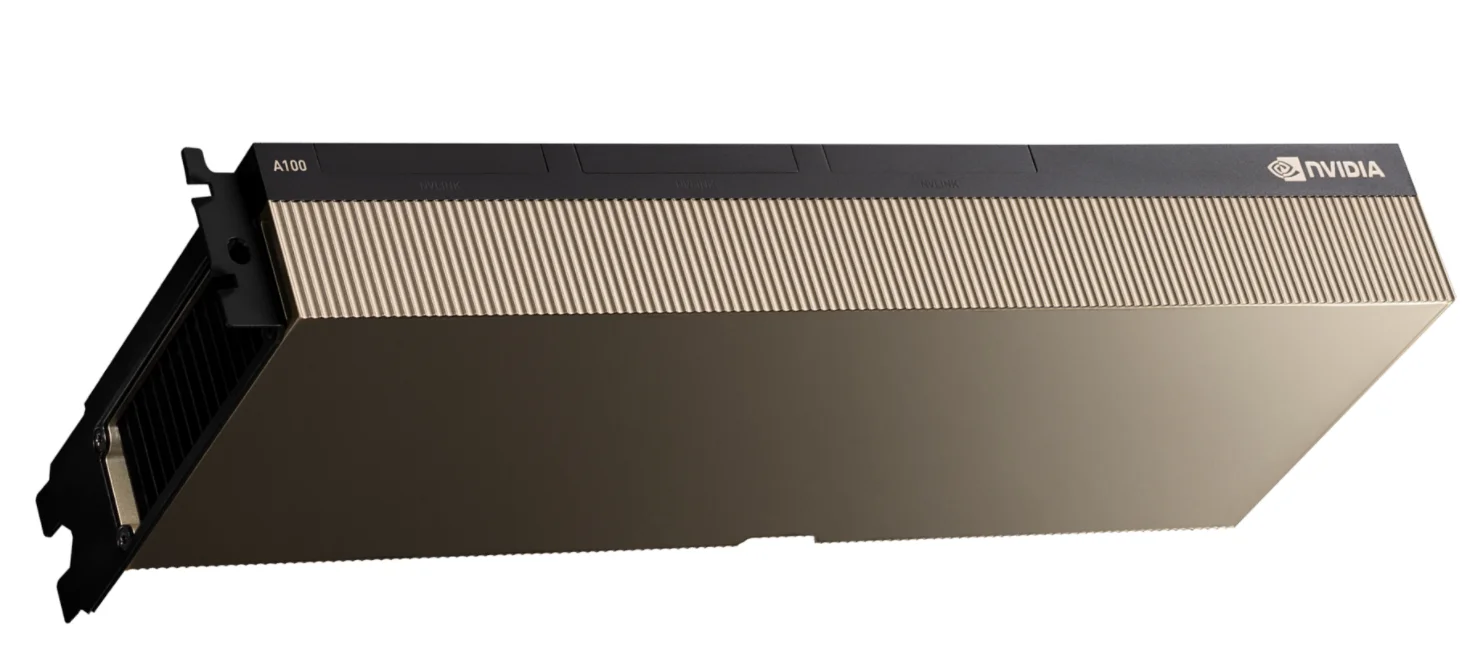

1. NVIDIA Tesla A100 80GB: A Game-Changer for AI & Data Centers

The NVIDIA Tesla A100 80GB is built on NVIDIA's Ampere architecture for demanding AI and HPC workloads. Features such as Multi-Instance GPU (MIG) and third-generation Tensor Cores raise both throughput and utilization for deep learning training, inference, and cloud computing.

Key Features:

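- 80GB HBM2e Memory – Roughly 2 TB/s of memory bandwidth lets large AI models and datasets stay resident on a single GPU.
- Multi-Instance GPU (MIG) – Partitions one A100 into as many as seven isolated GPU instances, each with its own memory and compute, so several users or jobs can share a card in cloud and AI environments.
- Tensor Core Acceleration – Third-generation Tensor Cores accelerate the matrix math at the heart of deep learning in TF32, FP16, BF16, INT8, and FP64 precisions.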
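To put "large AI models" in perspective, here is a rough back-of-the-envelope sketch of how many parameters fit in 80 GB. It assumes common rules of thumb (about 2 bytes per parameter for FP16/BF16 weights, and about 16 bytes per parameter for mixed-precision training with the Adam optimizer) and ignores activations, KV cache, and framework overhead, so practical limits are lower. The mode names and helper functions are hypothetical, chosen only for illustration.

```python
# Back-of-the-envelope sketch: how much model state fits on one A100 80GB?
# Assumptions (rules of thumb, not measurements): the per-parameter byte
# costs below; activations, KV cache, and framework overhead are ignored.

GPU_MEMORY_BYTES = 80 * 10**9  # conservative decimal 80 GB; usable memory varies

# Hypothetical modes with approximate bytes needed per model parameter.
BYTES_PER_PARAM = {
    "fp16_inference": 2,         # FP16/BF16 weights only
    "fp32_inference": 4,         # FP32 weights only
    "mixed_precision_adam": 16,  # FP16 weights + grads, FP32 master copy + 2 Adam moments
}


def model_state_bytes(num_params: int, mode: str) -> int:
    """Memory for weights (plus gradients and optimizer state when training)."""
    return num_params * BYTES_PER_PARAM[mode]


def fits_on_one_gpu(num_params: int, mode: str, gpu_bytes: int = GPU_MEMORY_BYTES) -> bool:
    """True if the estimated model state fits within the GPU's memory."""
    return model_state_bytes(num_params, mode) <= gpu_bytes


def max_params(mode: str, gpu_bytes: int = GPU_MEMORY_BYTES) -> int:
    """Largest parameter count whose estimated state fits in gpu_bytes."""
    return gpu_bytes // BYTES_PER_PARAM[mode]


if __name__ == "__main__":
    for mode in BYTES_PER_PARAM:
        print(f"{mode}: up to ~{max_params(mode) / 1e9:.1f}B parameters")
```

Under these assumptions, a 7B-parameter model's FP16 weights (about 14 GB) fit comfortably for inference, while full mixed-precision training of the same model (about 112 GB of state) would already need to be spread across more than one GPU.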

Use Cases:

- Autonomous Driving: Tesla, Inc. has used large A100 clusters to train the AI models behind its self-driving technology.
- Enterprise AI & HPC: Popular in AI research labs, data centers, and institutions focused on large-scale AI model training.
- Cloud-Based AI Processing: Offered as cloud instances on AWS, Google Cloud, and Microsoft Azure for advanced AI workloads.

2. NVIDIA A100 vs. AMD Instinct MI250: Which One Leads AI Acceleration?

Verdict:

The AMD Instinct MI250 delivers considerably higher FP64 throughput, which matters most for traditional double-precision HPC simulations. For deep learning, however, the A100 stands out thanks to its Tensor Cores, which speed up mixed-precision training and inference. Its Multi-Instance GPU (MIG) feature also lets a single card be shared efficiently across cloud and multi-tenant AI workloads.

3. NVIDIA A100 vs. Google TPU v4: AI Cloud Battle

Verdict:

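The A100 offers greater flexibility: it supports virtually every major AI framework and can be deployed on-premises or through any of the major cloud providers, making it a strong choice for data scientists and enterprises. Google TPU v4, on the other hand, is available only through Google Cloud and is best suited to teams whose training pipelines are built around XLA-compiled frameworks such as TensorFlow and JAX.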

4. Why Choose Server Tech Central for AI Hardware Solutions?

At Server Tech Central, we specialize in cutting-edge GPUs for AI, HPC, and cloud computing, ensuring businesses have access to high-performance solutions tailored to their needs.

Why Buy from Us?

✅ 100% Genuine NVIDIA A100 GPUs – All products come with a manufacturer's warranty.
✅ Expert Consultation – Get professional guidance for AI, deep learning, and HPC infrastructure.
✅ Competitive Pricing – Exclusive deals for bulk orders and enterprise solutions.

Final Thoughts: Is the NVIDIA A100 80GB the Best AI GPU?

For businesses focused on deep learning, AI model training, and autonomous driving, the NVIDIA Tesla A100 80GB remains a top-tier choice. While competitors like the AMD Instinct MI250 and Google TPU v4 provide compelling alternatives, NVIDIA’s Tensor Core technology gives the A100 a significant edge in AI acceleration.